Assessments are tools to enhance and examine learning. Traditionally, assessments were considered tools of learning, meaning measurements of content acquisition. However, we suggest thinking of assessments as integral to learning processes — tools for learning.

Using a variety of assessment approaches is necessary so students with different skills and backgrounds can flourish. The Middle States Commission on Higher Education (2007) identifies several core assessment characteristics, including:

Formative and summative

Assessments can be formative, which are often lower-risk learning activities, e.g., formal or informal discussions about a concept. Assessments can also be summative, which happen at the end to measure overall student learning, e.g., final projects.

Direct and indirect

Assessments can be direct, which measure the extent to which a student has learned course content, e.g., quizzes, projects or essays. And assessments can be indirect, which measure how students perceive their learning environment, e.g., short reflection essays on how they approached a project.

Qualitative and quantitative

Assessments can be qualitative, which often take the form of narrative responses and narrative-based rubrics, or quantitative, which express feedback through numerical data.

Offering a variety of assessments drawn from a mix of these categories will help you create meaningful opportunities for student learning and help you eliminate implicit bias in evaluation (Kieran & Anderson, 2019; Cho & Park, 2021). Some assessments might be graded; others, not graded. Some will require instructor feedback; others, peer feedback. Still others may require self-evaluation. The point of assessment is not to add to your workload (or theirs).

Rather, the goals of assessment are twofold:

- To give students the opportunity to demonstrate what they are learning.

- To help students develop the evaluative tools they’ll need beyond your class (Boud & Falchikov, 2007).

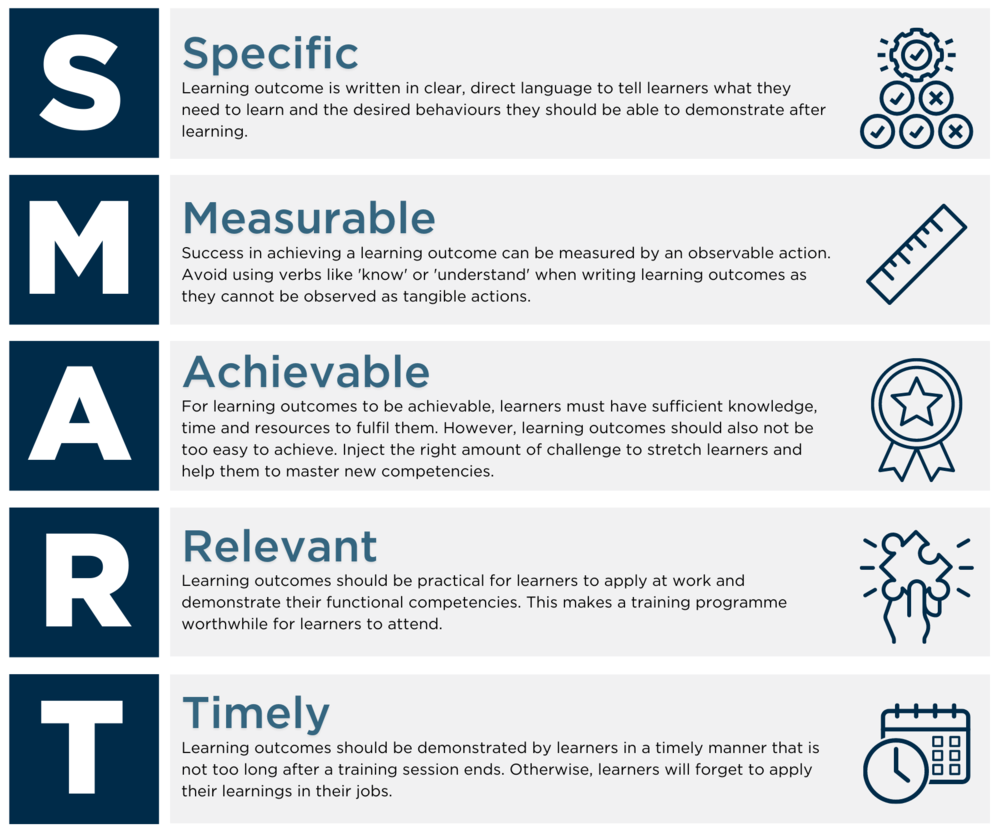

Finally, to be effective, assessments need to be SMART (Cho & Park, 2021):

- Specific

- Measurable

- Assignable

- Realistic

- Time-bound

For example:

- Not SMART: This assignment is meant to help you become a better writer.

- SMART: This five-page paper, due on March 15 at noon, will showcase your ability to produce a coherent and cohesive research-based literature review consisting of at least 20 relevant scholarly sources. (A customized rubric highlighting areas of assessment ought to be included in your directions as well.)

Remember: The point of assessment is to gauge not only how well students have learned content but also how they perceive what they have learned and how equipped they are to self-evaluate their work in your content area beyond your class (Middle States Commission on Higher Education, 2007; Boud & Falchikov, 2007).

Aligning outcomes and assessments

Helping students acquire and apply competencies is the foundation of university teaching. As you develop your coursework, consider these questions:

- What must students do to demonstrate competence?

- How can you measure their competence?

Learning outcomes are student-centered, measurable and specific statements that define the knowledge, skills and attitudes learners exhibit after instruction (enroll in our Learning Outcomes: Edujargon or Educessity sprint for additional information).

Consider taking a backward design approach to ensure your outcomes align with your assessments:

- Identify desired results that are specific and measurable (e.g., students will be able to incorporate critical theory while discussing contemporary events).

- Decide what evidence (e.g., which assessment) is needed to meet your desired outcomes (for example, “students will write an opinion article for their student newspaper on a current issue with three relevant references to critical theory”).

- Design and implement activities that will facilitate these results (see ideas below).

| Learning Outcomes | Authentic Assessment Measures |
|---|---|
| Investigate, critique, evaluate | Research paper, conference poster, OpEd, recommendation |
| Collaborate, identify multiple perspectives | Peer review, group task, critique, interview, survey |
| Demonstrate, implement, create, apply | Presentation, student teaching, experiment, case study, invention |
| Analyze, synthesize, present | Literature review or outline, research paper, conference poster, project |
| Describe, compare/contrast, critically examine | Debate, demonstration, discussion moderation, journaling |

Rubrics

Student-centered learning requires continuous, timely, objective and constructive feedback. However, most quantitative-based grading provides little to no information that helps improve or promote learning (Ragupathi & Lee, 2020). Rubrics allow instructors to combine the efficiency of quantitative assessment with qualitative feedback that helps students better understand what is being assessed and the criteria on which their grades are based. Moreover, rubrics ensure fair and consistent grading, increase student performance (Chan & Ho, 2019) and support all students (Quinn, 2020).

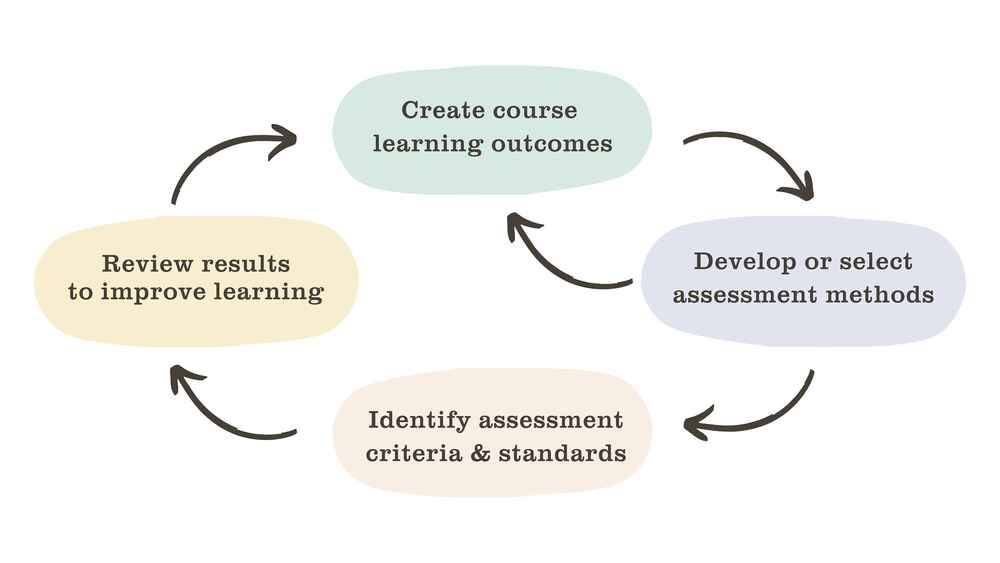

The image above — adapted from Ragupathi and Lee (2020) and Huba and Freed (2000) — outlines how rubric development fits into the course design process.

Before developing a rubric, instructors should know their intended learning outcomes and how these will be assessed. Nevertheless, outcomes are often solidified through the process of creating assignments (as in backward design). This is represented by the two arrows between “Creating course learning outcomes” and “Develop or select assessment methods.”

Next, the rubric’s criteria and standards should be identified, although it’s often not necessary to build these from scratch (Ragupathi & Lee, 2020). Consider reviewing examples, including AACU’s VALUE Rubrics and Rubistar’s “Search for a Rubric” function. Adapt these to meet your course’s specific needs and continue monitoring (and modifying) rubrics to ensure their reliability, validity and usability in future iterations of your class (Ragupathi & Lee, 2020).
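For instructors who keep grades in a script or spreadsheet export, the relationship between criteria, standards and feedback can be sketched in a few lines of Python. This is only an illustration, not a recommended tool: the criterion names, point levels and descriptor wording below are hypothetical, and the `Criterion` class and `grade` function are names invented for this sketch. It shows how a single rating per criterion can yield both a numerical score and the narrative descriptor that explains it.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Criterion:
    """One rubric row: a criterion and a narrative descriptor per point level."""
    name: str
    descriptors: Dict[int, str]  # points -> descriptor (hypothetical wording)


# Hypothetical two-criterion rubric for a literature review.
RUBRIC = [
    Criterion("Use of sources", {
        3: "Integrates relevant scholarly sources throughout.",
        2: "Uses relevant sources, but integration is uneven.",
        1: "Sources are few or loosely connected to the argument.",
    }),
    Criterion("Coherence", {
        3: "Ideas build on one another in a clear sequence.",
        2: "Mostly clear, with some abrupt transitions.",
        1: "Organization makes the argument hard to follow.",
    }),
]


def grade(rubric: List[Criterion], ratings: Dict[str, int]) -> Tuple[int, int, List[str]]:
    """Return (total points, maximum points, feedback lines) for one submission."""
    total, maximum, feedback = 0, 0, []
    for criterion in rubric:
        points = ratings[criterion.name]
        if points not in criterion.descriptors:
            raise ValueError(f"No level {points} defined for {criterion.name}")
        best = max(criterion.descriptors)
        total += points
        maximum += best
        # Pair the number with the descriptor so the score explains itself.
        feedback.append(f"{criterion.name} ({points}/{best}): {criterion.descriptors[points]}")
    return total, maximum, feedback


total, maximum, feedback = grade(RUBRIC, {"Use of sources": 3, "Coherence": 2})
print(f"Score: {total}/{maximum}")
for line in feedback:
    print(line)
```

Because every point level carries its own descriptor, students see why they received a score, not just the number, which is the combination of quantitative efficiency and qualitative feedback described above.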

Rubrics can be used on more than large projects. Instructors have found success implementing them on everything from weekly discussion board posts to peer reviews and even capstone ePortfolios. Read Wiggins’s (2022) “The Assessment System That Made Me Love Grading Again (Yes, Really!)” for more on how one teacher implemented a revision-based grading system driven by rubrics.

Alternative assessment ideas

The following alternative assessments have been adapted from 50 Alternatives to Lecture. For additional support developing active, alternative and authentic activities, enroll in our Active learning or Authentic pedagogy in the age of AI sprints.

Students can create a glossary of resources for any discipline. Using a collaborative document (e.g., Google Docs, Microsoft 365), ask students to contribute a specified number of shared references to the class. The instructor can evaluate the student on the quantity and quality of submissions as well as require students to include a summary and evaluation of each resource. If you use a spreadsheet (e.g., Google Sheets), you can create columns for different elements: citations, links to resources, summary, evaluations, student/creator names (to keep track of submissions) and other relevant notes.

Assessment qualities: Summative, direct, qualitative

Related outcomes: Collaborate, demonstrate, apply, explain
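If you export the shared glossary spreadsheet as a CSV file, a short script can help with the quantity side of evaluation. The sketch below is one possible approach under stated assumptions: the column names mirror the elements suggested above, while the sample rows, the student labels, the quota of two entries and the function names (`build_sheet`, `submissions_per_student`, `missing_quota`) are all hypothetical. Judging the quality of each summary and evaluation still requires reading them.

```python
import csv
import io
from collections import Counter

# Columns mirroring the spreadsheet elements suggested for the glossary.
COLUMNS = ["citation", "link", "summary", "evaluation", "student", "notes"]


def build_sheet(rows):
    """Return CSV text with a header row followed by the given rows."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)  # columns missing from a row are left blank
    return buffer.getvalue()


def submissions_per_student(sheet_text):
    """Count how many glossary entries each student has contributed."""
    reader = csv.DictReader(io.StringIO(sheet_text))
    return Counter(row["student"] for row in reader if row["student"])


def missing_quota(sheet_text, roster, required):
    """Map each student below the required count to the entries still owed."""
    counts = submissions_per_student(sheet_text)
    return {s: required - counts[s] for s in roster if counts[s] < required}


# Hypothetical placeholder entries, not real references.
sheet = build_sheet([
    {"citation": "Example source A", "link": "https://example.org/a",
     "summary": "Overview of topic A", "evaluation": "Clear and current",
     "student": "Student 1"},
    {"citation": "Example source B", "link": "https://example.org/b",
     "summary": "Case study on topic B", "evaluation": "Narrow but useful",
     "student": "Student 1"},
    {"citation": "Example source C", "link": "https://example.org/c",
     "summary": "Critique of topic A", "evaluation": "Dense but thorough",
     "student": "Student 2"},
])

roster = ["Student 1", "Student 2", "Student 3"]
print(missing_quota(sheet, roster, required=2))
```

Checking against a roster, rather than only counting names that appear in the sheet, also surfaces students who have not contributed anything yet.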

Informal debates encourage students to think critically about an issue and facilitate interactive class discussions. You can implement a debate on a discussion forum by assigning half of your students to each side of a controversial issue. A pro/con discussion often works best. Consider clarifying your objective — perhaps convincing the class (audience) rather than displaying skill in attacking the opponent. Students can develop longer initial posts and respond to two or more students on the opposite side of an issue. Flip it by asking students to take one stance in their initial post and then argue against their original opinion in their response posts. Ensure you have a detailed rubric and/or set of instructions for both original and response posts, including specified due dates that allow enough time for thoughtful replies.

Assessment qualities: Formative, indirect, qualitative

Related outcomes: Critique, compare/contrast, analyze, evaluate

Simulations create a rich environment where students actively become a part of a real-world system and function according to predetermined roles. Ask students to participate in a relevant activity done by professionals in your field. This might include simulating a United Nations Council Meeting, choosing which articles to include in a journal, curating an exhibition or developing a lesson plan. For this to work, it’s essential to establish guidelines ahead of time: define and assign roles, set up activities, provide detailed explanations and clarify assessments.

Multimedia simulations can also be added to an online course to illustrate, explain or deconstruct a process, function or system. Simulations may be embedded within a course website or delivered through another online system. They can be used as part of a presentation, a component of a test or quiz or an introduction to a new topic.

Assessment qualities: Formative, indirect, qualitative

Related outcomes: Describe, apply, present

A teacher or student poses a multi-staged problem and one student after another offers one step in its solution. Consider doing this in small groups. Jeopardy-style variation: students are given a list of solutions and asked to create the problems to which they are the answers. The instructor can provide guidance on what type of problem each solution answers (e.g., a quadratic equation). Each person is responsible for adding a step to the solution or problem. This can be done on a forum or through a collaborative document (e.g., Google Docs, Microsoft 365). Just be sure to have students mark their specific additions (through color coding, adding a comment or leaving their initials).

Assessment qualities: Formative, direct, quantitative

Related outcomes: Describe, apply, present

An ancient Greek instructional technique, symposiums are discussions in which a main topic is broken into various phases. Each phase is presented in a brief and concise speech by a student who becomes an expert on a particular phase. Online, students can perfect their phase individually or in small groups through text-based discussion and assignments facilitated by the instructor and then present their finalized “speech” to the entire class through video chat or video presentation (which could take the format of a PechaKucha experience). Consider asking students to come up with a specified number of questions they must ask others during a Q&A time at the end of each presentation or panel.

Assessment qualities: Summative, direct, qualitative

Related outcomes: Synthesize, present, demonstrate

Create and/or analyze images or other visual content. Instructors can publish pictures, diagrams or infographics and ask students to annotate, analyze, document or describe an image. Students can create their own virtual exhibitions, complete with a “museum catalog” detailing their choices and analysis. Variation: students can take photographs, source images or develop other multimedia.

Assessment qualities: Summative, direct, qualitative

Related outcomes: Apply, create, analyze

Reflect on practices or simulations to demonstrate practical reasoning and problem-solving processes (Kandlbinder, 2007). These writing exercises can be used in tandem with practice-based activities or as stand-alone exercises. They can be used to evaluate why choices were made, not only what choices were made.

Assessment qualities: Formative, indirect, qualitative

Related outcomes: Analyze, explain, reflect

References

Boud, D., & Falchikov, N. (2007). Rethinking assessment in higher education (pp. 191–207). London: Routledge.

Chan, Z., & Ho, S. (2019). Good and bad practices in rubrics: The perspectives of students and educators. Assessment & Evaluation in Higher Education, 44(4), 533–545.

Charles Sturt University. (n.d.). Assessment types. Division of Teaching and Learning.

Cho, J., & Park, M. (2021). Culturally responsive formative assessment: Metaphors, smarter feedback, and culturally responsive STEM education. International Journal of Latest Research in Humanities and Social Science, 65–73.

Huba, M. E., & Freed, J. E. (2000). Learner-centered assessment on college campuses: Shifting the focus from teaching to learning. Allyn & Bacon.

Indiana University Bloomington. (n.d.). Developing learning outcomes. Center for Innovative Teaching and Learning.

Kandlbinder, P. (2007). Writing about practice for future learning. In Rethinking Assessment in Higher Education (pp. 169–176). Routledge.

Kieran, L., & Anderson, C. (2019). Connecting universal design for learning with culturally responsive teaching. Education and Urban Society, 51(9), 1202–1216.

March, L. (2020). 50 alternatives to lecture.

Middle States Commission on Higher Education. (2007). Student learning assessment: Options and resources, 2nd ed.

Quinn, D. M. (2020). Experimental evidence on teachers’ racial bias in student evaluation: The role of grading scales. Educational Evaluation and Policy Analysis, 42(3), 375–392.

Sanger, C. S., & Gleason, N. W. (Eds.). (2020). Diversity and inclusion in global higher education: Lessons from across Asia. Springer Singapore.